Three reasons why you shouldn’t use machine translation for French

But if you must, at least run it through Fairslator.

You know how it is: you want to say something in French but you’re not quite sure how, so you rush to a machine translation tool such as Google Translate or DeepL.

Yes, machine translators have become really good in recent years, especially in high-profile language pairs such as English-French. But don’t let that lull you into a false sense of security. No matter how good the AI gets, there will always be occasions when the machine understands something differently from how you meant it, and therefore translates it differently.

This article will show you three examples of that, explain why they happen, and tell you how Fairslator can keep you safe from those traps.

1. What do you mean by ‘you’?

You probably know that French has two words for ‘you’: tu refers to one person and vous refers to several people. Vous can also refer to one person if you’re addressing him or her formally, while tu is informal.

You know this, but does Google Translate know it? Not really. Ask Google Translate (or any other machine translator) to translate ‘I like you’ and it will pick tu or vous more or less arbitrarily. What if it picks tu but you wanted to say this sentence to a group of people?

To prevent that from happening, the trick is not to use Google Translate directly but to use it through Fairslator. Fairslator is a tool which obtains translations from an external machine translator and then puts them through an ambiguity detection filter. When it detects that more than one translation is possible, as in this case with ‘you’, it warns you about it and gives you a choice: do you want to translate ‘you’ as a single person or multiple people, as formal or informal? Depending on your answer, Fairslator re-inflects the translation accordingly.

2. Student, director and other gender-specific nouns

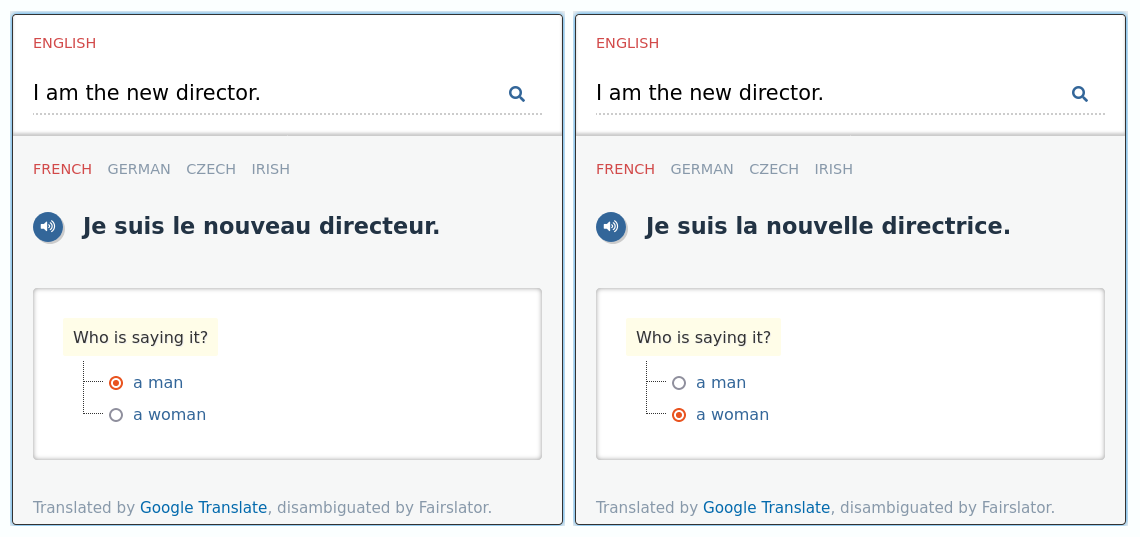

Enough about ‘you’, let’s talk about other people. French is one of the many European languages that have gender-specific nouns for occupations and roles. Where English has ‘student’, French has étudiant for a male student and étudiante for a female student. Where English has ‘director’, French has directeur for a male director and directrice for a female director.

This is another stumbling block for machine translators. If you ask one to translate a sentence containing one of these words, such as ‘I am the new director’, it will probably choose the male version, directeur. The machine is biased in favour of male directors because it has seen more male than female directors in its training data. But what if you’re a woman? Then announcing to people that you are their new directeur (instead of directrice) is going to make you sound a bit silly.

The trick is, again, not to use machine translation directly but to go through Fairslator. Fairslator will detect that there are two gender-specific translations for ‘director’ and ask you which one you want.

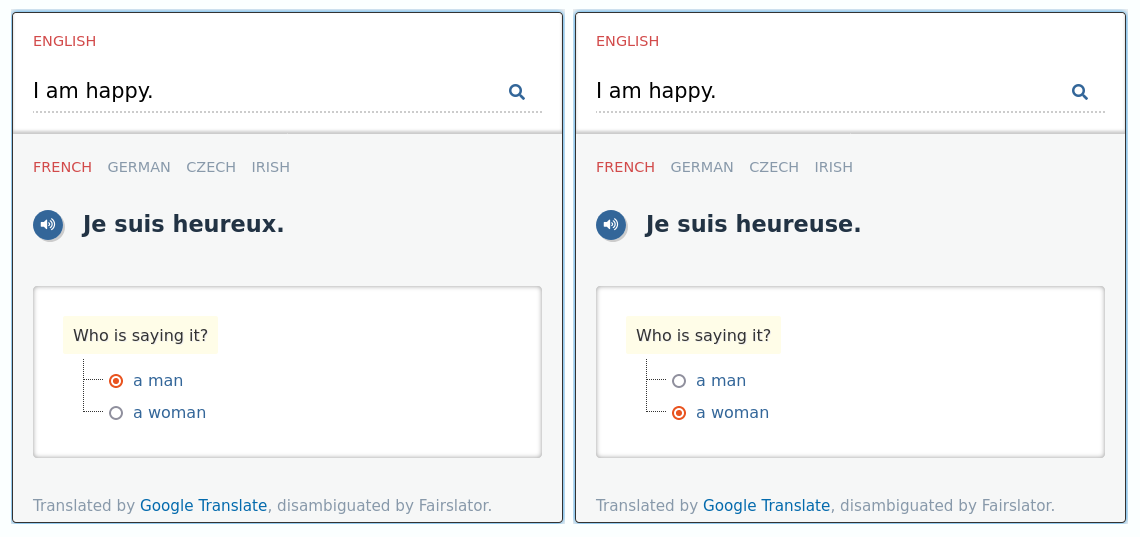

3. Happy as a man, happy as a woman

And it gets worse: the same gender-specificity affects adjectives too, for example when they follow the verb être (‘to be’). This means that even a simple sentence like ‘I am happy’ has two possible translations, depending on whether the person that ‘I’ refers to is male or female: je suis heureux if male, je suis heureuse if female.

Again, machine translators usually jump to a conclusion and assume that the person behind ‘I’ must be male. To prevent that from happening, run your text through Fairslator instead.

En conclusion...

These have been just three examples of situations where machine translators often fail to deliver the correct translation. They fail because they jump to conclusions too quickly: they assume they know better than you what you mean by certain words. Fairslator is a plug-in for many popular machine translators which reverse-engineers these assumptions and hands control back to you, the human user.

So, tell all your French-speaking friends about Fairslator – but don’t forget to use the correct translation for ‘you’!

Michal Měchura